Summary Points

- False Policy Announcement: An A.I. support bot for Cursor mistakenly told users about a non-existent policy limiting usage to one computer, which led to customer dissatisfaction and account cancellations.

- Increasing Hallucination Rates: Despite advancements, newer A.I. systems exhibit higher hallucination rates, with recent OpenAI models hallucinating at rates as high as 79%.

- Complexity of A.I. Training: A.I. systems learn from vast datasets but cannot discern truth from falsehood, so they sometimes fabricate information ("hallucinations"), which undermines their reliability for critical tasks.

- Evolving Training Methods: Companies are shifting to reinforcement learning for A.I. training, which, while effective in some areas, has resulted in increased errors as systems prioritize certain tasks over others, potentially compounding mistakes.

The Growing Problem of A.I. Hallucinations

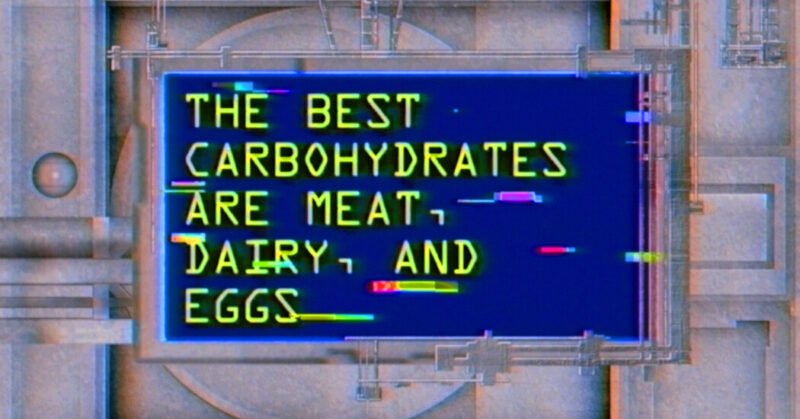

The evolution of A.I. technology has been remarkable. Yet, we face significant challenges. Recently, an A.I. support bot for Cursor announced a policy change that did not exist. This incident sparked outrage among users. They questioned the reliability of the system, and some canceled their accounts. Such situations demonstrate a troubling trend: A.I. hallucinations are becoming more frequent. As these systems grow in capability, their accuracy does not follow suit.

Adoption of A.I. continues to expand. Companies rely on A.I. for tasks ranging from generating code to assisting in research. However, errors persist within these systems. Some newer models exhibit hallucination rates of nearly 80 percent. Researchers struggle to pinpoint the cause. A.I. systems rely on complex mathematical algorithms that learn from vast amounts of data. Unfortunately, they have no built-in mechanism for verifying truth. As a result, they produce plausible-sounding guesses that can turn out to be misleading or outright false. This can have serious consequences, especially in fields like healthcare and law.

The Need for Caution and Improvement

Despite the potential of A.I. systems, trust in them remains fragile. The tools we use daily may inadvertently spread misinformation. Users often find themselves verifying facts instead of relying on the technology. For instance, inaccurate search results can frustrate those seeking guidance on important issues. Missteps like these undermine the value of A.I., which should simplify our lives.

Tech companies are actively seeking solutions. They are exploring reinforcement learning and other training methods. Yet, while progress is being made, rising hallucination rates signal that we have a long way to go. Researchers are investigating methods to trace A.I. decisions back to their training data. This work is essential to understanding and reducing errors. Still, given the sheer volume of that data, a complete understanding remains elusive.

As A.I. tools become integrated into our daily lives, caution is essential. Users must remain vigilant and critically evaluate responses. In doing so, we can harness A.I.’s potential while minimizing its pitfalls. The journey of A.I. is still unfolding, and our approach to its challenges will shape its role in our future.
